On January 21, the International Conference on Learning Representations (ICLR 2023) announced its acceptance results, and a paper from Prof. Fan Zhang's team in our institute was among those accepted. The paper proposes a new data augmentation method for training vision transformers on images.

Paper information: Qihao Zhao, Yangyu Huang, Wei Hu, Fan Zhang, Jun Liu, "MixPro: Data Augmentation with MaskMix and Progressive Attention Labeling for Vision Transformer," International Conference on Learning Representations (ICLR), 2023.

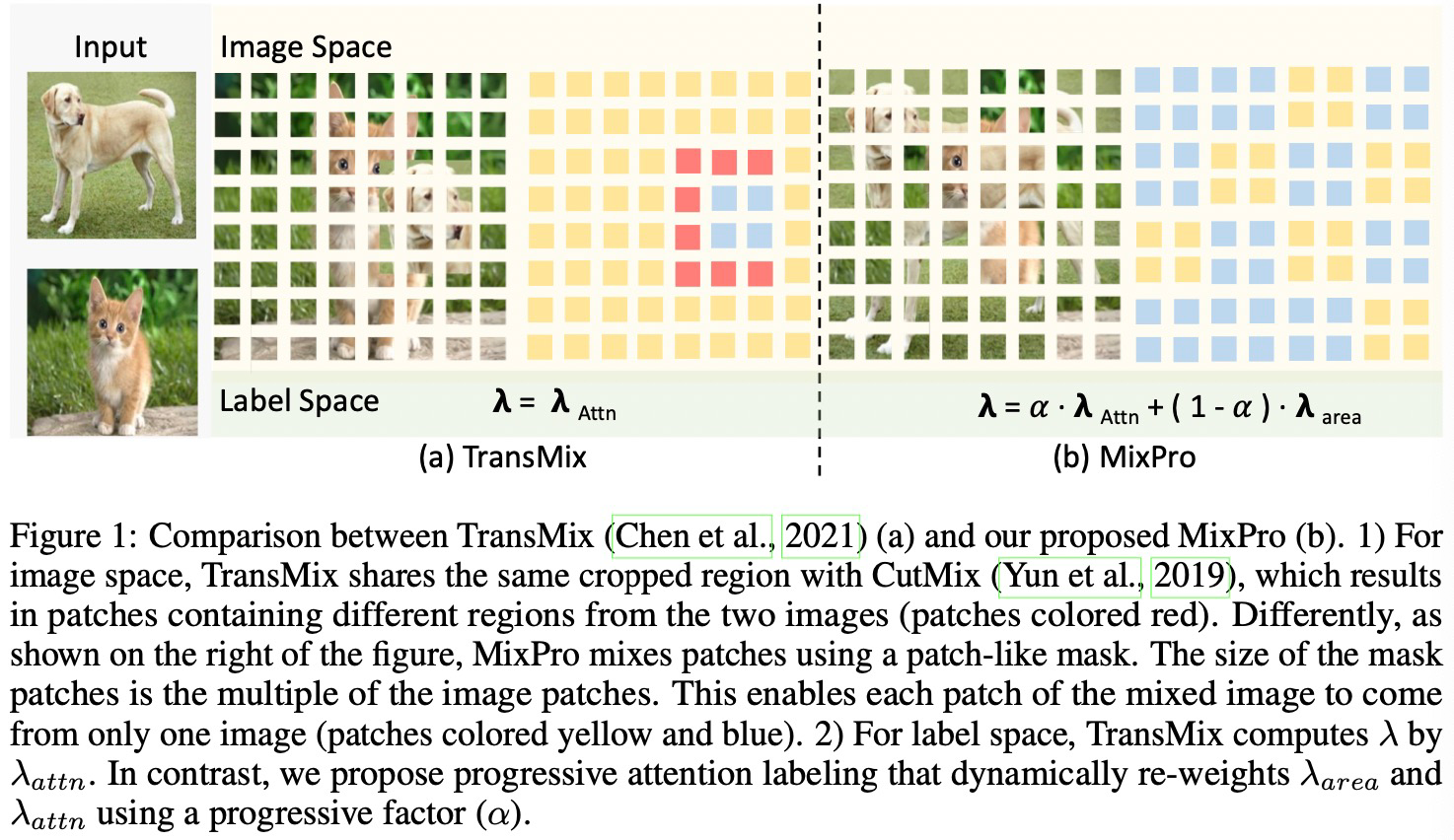

Abstract: The recently proposed data augmentation TransMix employs attention labels to help visual transformers (ViT) achieve better robustness and performance. However, TransMix is deficient in two aspects: 1) The image cropping method of TransMix may not be suitable for vision transformer. 2) At the early stage of training, the model produces unreliable attention maps. TransMix uses unreliable attention maps to compute mixed attention labels that can affect the model. To address the aforementioned issues, we propose MaskMix and Progressive Attention Labeling (PAL) in image and label space, respectively. In detail, from the perspective of image space, we design MaskMix, which mixes two images based on a patch-like grid mask. In particular, the size of each mask patch is adjustable and is a multiple of the image patch size, which ensures each image patch comes from only one image and contains more global contents. From the perspective of label space, we design PAL, which utilizes a progressive factor to dynamically re-weight the attention weights of the mixed attention label. Finally, we combine MaskMix and Progressive Attention Labeling as our new data augmentation method, named MixPro. The experimental results show that our method can improve various ViT-based models at scales on ImageNet classification (73.8% top-1 accuracy based on DeiT-T for 300 epochs). After being pre-trained with MixPro on ImageNet, the ViT-based models also demonstrate better transferability to semantic segmentation, object detection, and instance segmentation. Furthermore, compared to TransMix, MixPro also shows stronger robustness on several benchmarks.
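The image-space idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `maskmix`, its parameters (`patch_size`, `scale`, `mix_ratio`), and the choice to select grid cells uniformly at random are assumptions made for illustration. It only shows how a grid mask whose cell size is a multiple of the ViT patch size keeps every image patch within a single source image. The returned `lam` is a plain area-based mixing ratio; Progressive Attention Labeling is not implemented here, since the abstract does not give its formula.

```python
import numpy as np

def maskmix(img_a, img_b, patch_size=16, scale=2, mix_ratio=0.5, rng=None):
    """Mix two equally sized HxWxC images with a patch-like grid mask.

    Hypothetical sketch: names and defaults are assumptions, not the paper's API.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = img_a.shape[:2]
    # The mask cell size is a multiple of the image patch size,
    # so no image patch is ever split between the two sources.
    cell = patch_size * scale
    if h % cell or w % cell:
        raise ValueError("image size must be divisible by the mask cell size")
    gh, gw = h // cell, w // cell
    n_cells = gh * gw
    # Assumption: pick the cells taken from img_b uniformly at random.
    n_b = int(round(mix_ratio * n_cells))
    flat = np.zeros(n_cells, dtype=bool)
    flat[rng.choice(n_cells, size=n_b, replace=False)] = True
    grid = flat.reshape(gh, gw)
    # Expand the coarse grid to pixel resolution.
    mask = np.repeat(np.repeat(grid, cell, axis=0), cell, axis=1)
    mixed = np.where(mask[..., None], img_b, img_a)
    # Area share of img_a, usable as a simple label-mixing weight.
    lam = 1.0 - mask.mean()
    return mixed, mask, lam

# Usage: mix an all-zeros image with an all-ones image.
a = np.zeros((224, 224, 3), dtype=np.float32)
b = np.ones((224, 224, 3), dtype=np.float32)
mixed, mask, lam = maskmix(a, b, rng=np.random.default_rng(0))
```

With `scale=1` the mask cells coincide with the image patches; larger values of `scale` keep bigger contiguous regions from each image, which is the adjustable mask patch size the abstract refers to.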

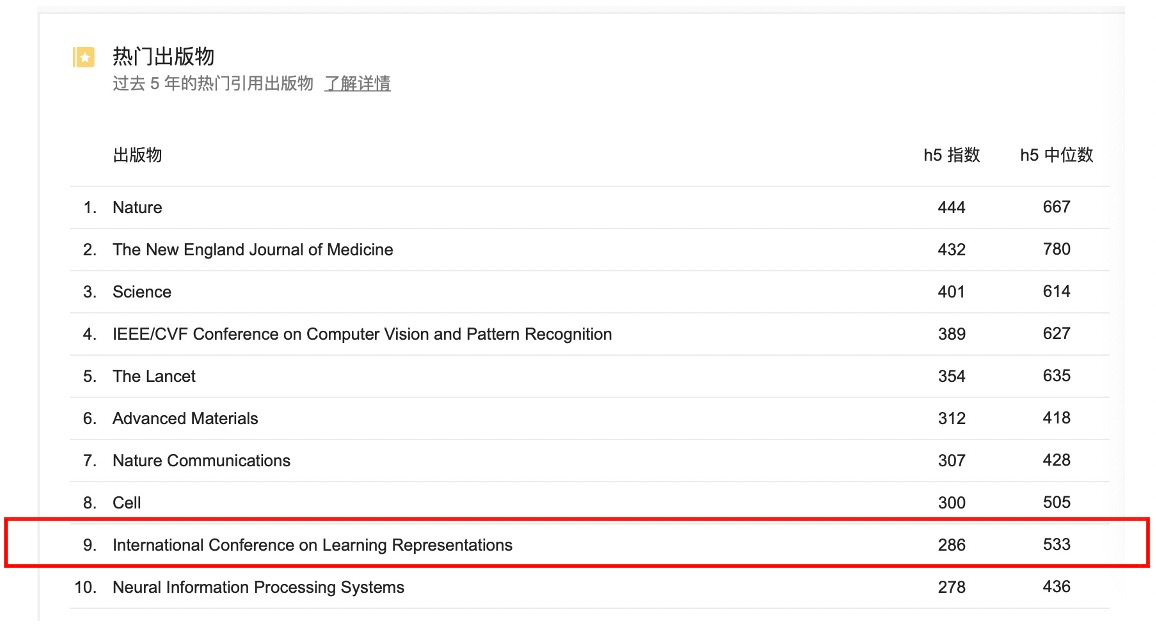

The International Conference on Learning Representations (ICLR) is a top conference in artificial intelligence. ICLR 2023 will be held in Kigali, the capital of Rwanda, from May 1 to 5. In the 2022 Google Scholar ranking of journal and conference impact, ICLR placed 9th among all journals and conferences across disciplines, second only to IEEE CVPR among computer science venues.

The first author of the paper is Qihao Zhao, a PhD student in the School of Information Science and Technology, supervised by Prof. Fan Zhang and Associate Prof. Wei Hu. The work was completed in collaboration with Microsoft Research Asia and Singapore University of Technology and Design, with Beijing University of Chemical Technology as the first affiliation. It was funded by the National Natural Science Foundation of China and other projects.