Su Yuhang, a master's student in the 2022 cohort of the College of Information Science and Technology at Beijing University of Chemical Technology, has achieved another breakthrough in intelligent music retrieval. After publishing the audio retrieval method AMG-Embedding as first author at ACM Multimedia 2024, a CCF-A conference, he has had his latest work, MIDI-Zero, accepted at ACM SIGIR 2025, also a CCF-A conference. Publishing at two top international conferences within a single year reflects both Su Yuhang's research and innovation ability and the College's research strength and talent cultivation in AI music retrieval. Both papers were supervised by Associate Professor Hu Wei and Professor Zhang Fan.

MIDI-Zero: A MIDI-driven Self-Supervised Learning Approach for Music Retrieval

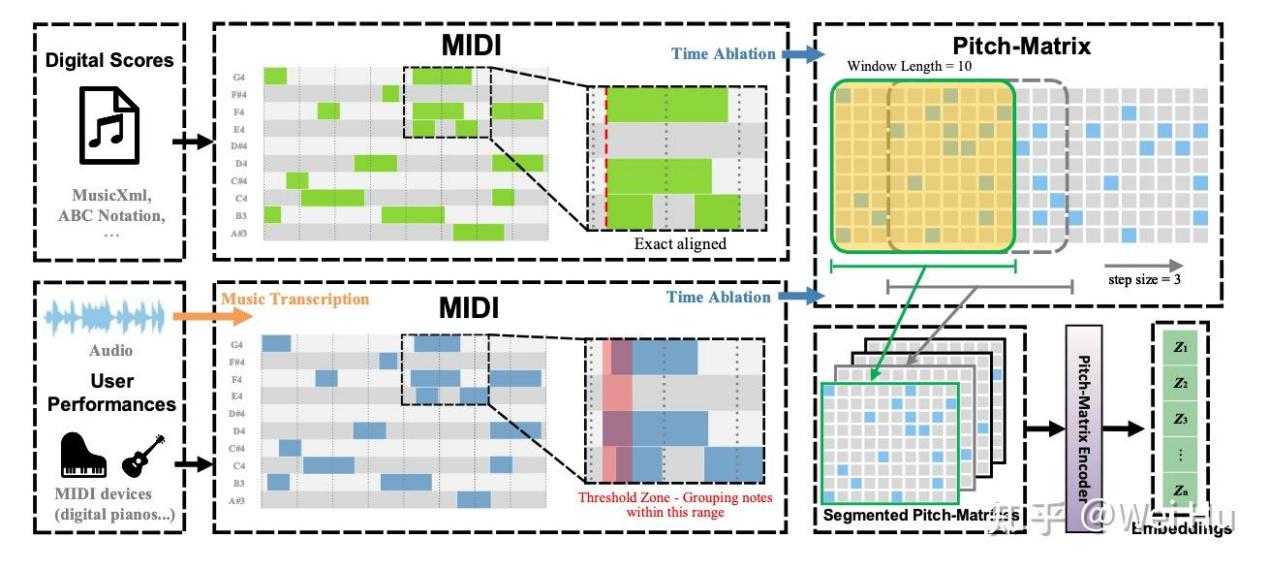

MIDI-Zero is a new self-supervised learning framework for content-based music retrieval (CBMR) that covers core subtasks such as audio identification, audio matching, and version identification. Unlike traditional methods that extract features from audio signals or spectrograms, MIDI-Zero operates entirely on MIDI representations. Its most notable feature is that it requires no external training data: all training data is generated automatically according to predefined task rules, which removes the dependence on labeled datasets and external music libraries. MIDI-Zero applies directly to symbolic music data, and it can also handle audio tasks by first converting audio to MIDI with a music transcription model. Extensive experiments show that MIDI-Zero performs strongly across multiple CBMR subtasks. The method simplifies feature extraction, bridges the gap between audio and symbolic music representations, and provides a flexible and efficient solution for music retrieval.
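To make the idea of rule-generated training data concrete, the following is a minimal Python sketch. It is not the procedure from the paper: the note format, the helper names `random_melody`, `make_positive`, and `intervals`, and the two rules used here (transposition and tempo scaling) are assumptions chosen purely for illustration. It only shows how anchor, positive, and negative examples could be produced without any external dataset.

```python
import random

# Hypothetical note format: (midi_pitch, onset_seconds, duration_seconds).

def random_melody(n_notes, rng):
    """Generate a synthetic melody from simple rules; no dataset is needed."""
    notes, onset = [], 0.0
    pitch = rng.randint(55, 75)
    for _ in range(n_notes):
        duration = rng.choice([0.25, 0.5, 1.0])
        notes.append((pitch, onset, duration))
        onset += duration
        # Random step to the next pitch, clamped to a safe MIDI range.
        pitch = min(96, max(36, pitch + rng.randint(-5, 5)))
    return notes

def make_positive(notes, rng):
    """Create a second view of the same melody (assumed rules: transpose + tempo)."""
    shift = rng.randint(-6, 6)            # semitones
    scale = rng.choice([0.8, 1.0, 1.25])  # time-stretch factor
    return [(p + shift, on * scale, d * scale) for p, on, d in notes]

def intervals(notes):
    """Pitch intervals between consecutive notes; unchanged by transposition."""
    return [b[0] - a[0] for a, b in zip(notes, notes[1:])]

rng = random.Random(0)
anchor = random_melody(16, rng)
positive = make_positive(anchor, rng)  # same melody, different key and tempo
negative = random_melody(16, rng)      # unrelated melody
```

In a contrastive setup, a model would be trained to embed `anchor` close to `positive` and far from `negative`; the interval sequence is one simple property that the two matching views share.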

AMG-Embedding: A Self-Supervised Embedding Approach for Audio Identification

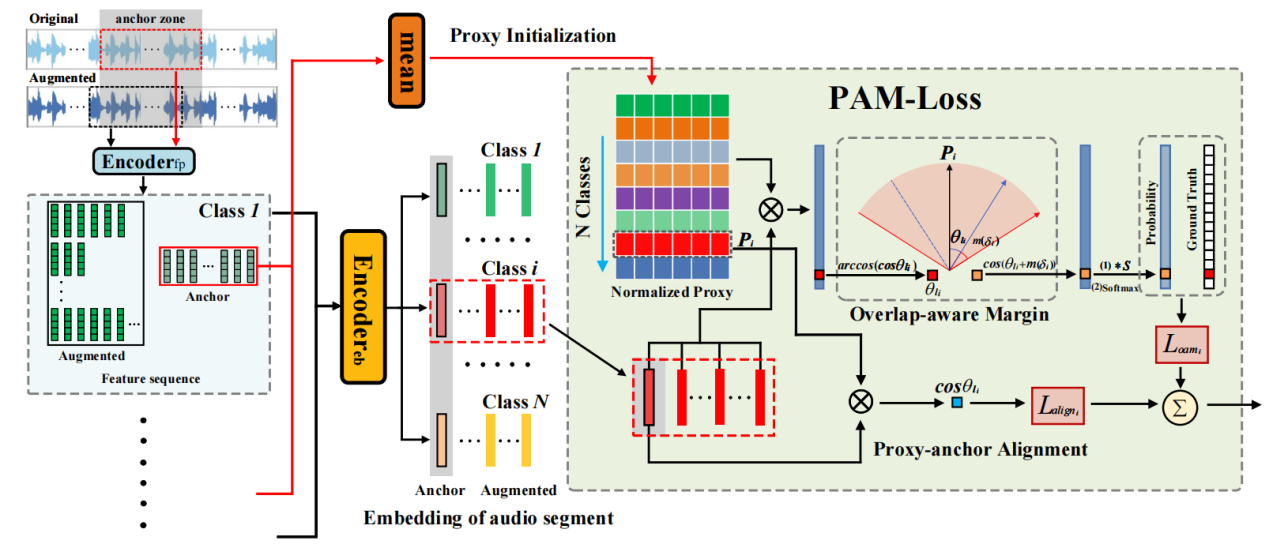

AMG-Embedding addresses the audio retrieval task: given a short audio clip, retrieve the exactly matching content from a massive music library. Traditional fingerprinting methods store features for a large number of short, fixed-length, overlapping segments, which leads to high storage and computational costs. In contrast, AMG-Embedding uses self-supervised learning and a two-stage embedding process to convert variable-duration, non-overlapping segments into compact embeddings, departing from the traditional paradigm. Experimental results show that AMG-Embedding maintains retrieval accuracy comparable to traditional fingerprinting methods while reducing storage requirements and retrieval time to less than one-tenth of theirs. This significantly improves the scalability and efficiency of audio retrieval systems.
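The storage argument above can be illustrated by counting how many index entries one track produces under each segmentation scheme. The sketch below is illustrative only: the window, hop, and segment lengths are hypothetical values, not parameters from the paper, and the function names are invented for this example.

```python
def count_overlapping_windows(duration_ms, window_ms, hop_ms):
    """Number of fixed-length windows when sliding by hop_ms over a track."""
    if duration_ms < window_ms:
        return 0
    return (duration_ms - window_ms) // hop_ms + 1

def split_non_overlapping(duration_ms, lengths_ms):
    """Cut a track into back-to-back segments, cycling through lengths_ms."""
    segments, start, i = [], 0, 0
    while start < duration_ms:
        end = min(duration_ms, start + lengths_ms[i % len(lengths_ms)])
        segments.append((start, end))
        start = end
        i += 1
    return segments

track_ms = 240_000  # a 4-minute track

# Fingerprint-style indexing: 1 s windows with a 0.5 s hop (assumed values).
n_windows = count_overlapping_windows(track_ms, window_ms=1_000, hop_ms=500)

# Embedding-style indexing: variable-length, non-overlapping segments
# (assumed lengths of 8 s, 12 s, and 10 s, repeated).
segments = split_non_overlapping(track_ms, [8_000, 12_000, 10_000])

print(n_windows, len(segments))  # prints "479 24"
```

With these assumed settings, the overlapping scheme stores 479 entries per track and the non-overlapping scheme stores 24. The actual savings reported for AMG-Embedding depend on its own segmentation and embedding sizes, which this sketch does not model.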

ACM SIGIR and ACM Multimedia are both rated A-level conferences by the China Computer Federation (CCF) and represent the highest international academic standards in information retrieval and multimedia, respectively. The team's publications at both conferences within a single year demonstrate its deep technical foundation and academic leadership in AI music retrieval.